Smarter Honeypots v2 - Using GenAI for High-Fidelity Contextual Deception

Abstract

The effectiveness of a honeypot largely depends on its ability to deceive attackers into believing they have compromised a genuine production system. Traditional honeypots often suffer from a lack of realism, presenting generic configurations that sophisticated adversaries can easily identify. In this post, I expand upon an earlier idea I posted about in last december, about leveraging Generative AI to create more believable honeypots. By integrating Large Language Models into the honeypot’s response generation pipeline, I demonstrate in a visual way, how to dynamically create highly contextualized web server and file systems responses. This method allows for the deployment of “story-driven” deception environments that adapt to the persona of the target organization, significantly increasing the time and resources an attacker must invest to discern the ruse. I present a proof-of-concept implementation and analyze real-world traffic logs to illustrate the potential of this technique in gathering actionable threat intelligence.

Introduction

Honeypots have widely been deployed as early warning systems in cybersecurity, serving as decoy targets to detect, deflect, and study hacking attempts. While images for generic honeypot services are readily available, their utility is often limited by their static nature. A standard, unconfigured honeypot looks exactly like what it is: a trap.

The emergence of Generative AI presents a significant opportunity to redefine this paradigm. Rather than relying on static templates, we can now use GenAI to inject specific context and narrative into the honeypot’s behavior, making it indistinguishable from a tailored production asset.

The Foundation: Traditional Honeypots

While honeypots have served as strategic decoys for decades, the landscape has changed, and these traditional systems are increasingly struggling to keep up with sophisticated adversaries. To understand why a new approach is needed, we must examine the fundamental challenges that plague legacy designs.

Five Fundamental Challenges

1. The Maintenance and Configuration Nightmare One of the most immediate hurdles is the heavy manual setup required to get a traditional honeypot off the ground. Each decoy typically supports only a few protocols, and making a system appear “real” demands substantial manual effort from highly skilled personnel. Because traditional designs are often limited to their original capabilities, they cannot easily simulate new or evolving environments without a complete reconfiguration.

2. The Interaction-Security Paradox Honeypot designers have long grappled with a fundamental tension between operational security and deceptive realism, leading to a segmented landscape:

- Low-Interaction Systems (e.g., Dionaea, HoneyD) emulate specific services. They are cost-effective and safe to deploy, but their static nature makes them easy for attackers to fingerprint and bypass.

- High-Interaction Systems provide access to a real operating system. While they offer the highest fidelity, they are resource-intensive and carry significant risks—potentially providing a pivot point for attackers to compromise actual production assets.

- Medium-Interaction Systems (e.g., Cowrie, Kippo) attempt to bridge this gap by simulating shell environments. However, they often fail to convince skilled adversaries due to rigid, pre-defined responses.

3. The Interaction Trilemma Traditional terminal honeypots face what researchers call a “trilemma“ between flexibility, interaction level, and deceptive capability.

- Flexibility: Rigid system configurations make it hard to replicate a variety of services authentically.

- Interaction Level: Most code-based honeypots rely on simple rule-based matching. This means they often fail or crash when an attacker issues complex commands, such as those involving pipe symbols (

|) or chained instructions. - Deceptive Capability: Because they are static, experienced attackers can quickly recognize the “monotonous simulations” and terminate the session.

4. Failure to Maintain Context A significant weakness in traditional emulated shells is the lack of state management. Legacy systems often struggle to “remember” the effects of an attacker’s previous actions. If an attacker creates a file or changes a directory, a traditional script-driven honeypot might not reflect those changes in the next turn of the conversation, immediately alerting the attacker that they are in a decoy environment.

5. Vulnerability to Automated Fingerprinting We are no longer just fighting human hackers; we are fighting autonomous AI agents that can scan millions of endpoints at machine speed. These bots are trained to spot rigid scripts and predictable behaviors. Because traditional honeypots often use default configurations, they leave behind identifiable signatures that tools like Shodan can use to catalog them by the thousands.

The GenAI Revolution: The Rise of Intelligent, Adaptive Honeypots

This fundamental limitation of static decoys necessitated an evolutionary leap. The integration of Generative AI, particularly Large Language Models (LLMs), marks a paradigm shift from static, predictable decoys to dynamic, intelligent deception systems. This evolution is a direct response to the inherent shortcomings of traditional honeypots, leveraging AI’s capacity for contextual awareness and adaptability to overcome the long-standing trilemma of deception technology. By placing an LLM at their core, modern honeypots can simulate complex environments, learn from interactions, and evolve in real time, making them far more convincing and effective at engaging adversaries.

The current generation of AI-powered honeypots is defined by several core architectural innovations that set them apart from their predecessors.

LLMs as the Core Response Engine Frameworks like OHRA and VelLMes utilize an LLM as a central engine to simulate a wide array of network services—such as SSH, HTTP, Telnet, and SMTP in OHRA’s case, or SSH, MySQL, and POP3 in VelLMes’—within a single, unified architecture. This modular approach overcomes the rigidity of older, single-purpose honeypots, allowing for flexible and scalable emulation of diverse network environments.

Advanced Persona Shaping The believability of an AI honeypot hinges on sophisticated prompt engineering. Modern frameworks like VelLMes employ advanced techniques to craft convincing system personas. These include chain-of-thought reasoning to deconstruct complex commands, providing examples to guide LLM behavior, and using structured “personality prompts” that instruct the model on how to respond realistically within a specific context, such as a Linux shell or a POP3 server.

Context and State Management One of the primary challenges for LLMs is maintaining statefulness across a prolonged interaction. Frameworks like HoneyGPT address this by implementing a “System State Register” and a “History of Interaction”. These components provide the LLM with the necessary context to remember previous commands and their impact on the simulated system, ensuring session consistency and prolonging attacker engagement.

Local vs. Cloud Deployment While early AI honeypots often relied on commercial, cloud-based LLMs, this approach introduced data privacy risks, latency issues, and high operational costs. Newer frameworks are in the works and are pioneering the use of lightweight, locally deployed models. This strategy mitigates data protection concerns by keeping sensitive interaction data within organizational boundaries and reduces both latency and cost.

Despite these advancements, the current generation of LLM-based honeypots faces its own set of challenges and vulnerabilities that limit wider adoption:

- Hallucination and Persona Breaks: LLMs are prone to generating plausible but non-factual content, which can cause the honeypot to break character and reveal its artificial nature.

- Response Latency: The time required for an LLM to process a command and generate a response (inference delay) can be noticeably longer than a real system, tipping off a vigilant attacker.

- Prompt Injection: Identified by OWASP as a top security threat, prompt injection occurs when an attacker crafts input to manipulate the LLM’s behavior, potentially overriding its instructions or security constraints. Techniques like Retrieval-Augmented Generation (RAG) do not fully mitigate this critical vulnerability.

- Resource Intensity: The development, tuning, and maintenance of AI-powered honeypots demand significant computational power and specialized expertise.

These vulnerabilities do not exist in a vacuum; they represent a new attack surface perfectly suited for exploitation by AI-driven adversaries.

A Holiday Experiment: The North Pole Cyber Defense Cloud

To better understand the practical capabilities and limitations of GenAI in this domain, I spent some free time over the holiday break building a prototype. The goal wasn’t just to build a better mousetrap, but to see how quickly a convincing deception environment could be spun up from scratch. The alarming speed at which this system came together serves as a stark warning: if defenders can build high-fidelity simulations this easily, attackers can likely leverage the same tools to build sophisticated scanners and targeting systems just as fast.

The Believability Gap

Consider an organization named “Blogofy Inc.” A honeypot deployed within this network using a default httpd configuration file referencing “ACME Corp” breaks the illusion immediately. To achieve high fidelity, the honeypot must reflect the specific attributes of its environment: business size, industry vertical, network architecture, geolocation, and even internal personnel. Historically, crafting such a bespoke environment required significant manual effort.

This is the specific problem the North Pole Cyber Defense Cloud (NPCDC) concept aims to solve by dynamically generating this context.

Methodology: Narrative Prompting

The prototype demonstrates how GenAI agents can act as the dynamic engine behind the honeypot’s static assets. By providing a rich prompt that defines the “story” of the server, we can automate the generation of believable configuration files, user directories, and system artifacts.

The core of this approach lies in “Telling the Story” to the AI. Instead of asking for a generic config file, I supply a detailed persona. Below is an excerpt of the system prompt used to generate the “North Pole” environment:

Backstory:

Santa Claus has modernized. the 'Naughty or Nice List' is now on a blockchain. The Toy Workshop uses IoT-enabled elves...

Your Mission:

Generate content for the requested file: $pagename.

The content should look like it comes from this insecure, festive, but high-stakes environment...

Directives:

1. Subtle Humor: Use occasional puns or festive references...

2. Be Vulnerable: Make it look like Alabaster left keys, passwords, or weird config files lying around.

3. Specific Handling:

- If the filename ends with a well known extension, generate believable content for that file...

- If the request path starts with '/api/', return JSON content.

- Any generated email addresses must use '@blogofy.com'.

Tone:

Official, Urgent, yet Festive. Use 'Classified: North Pole Eyes Only' headers where appropriate.

Visualizing the Threat

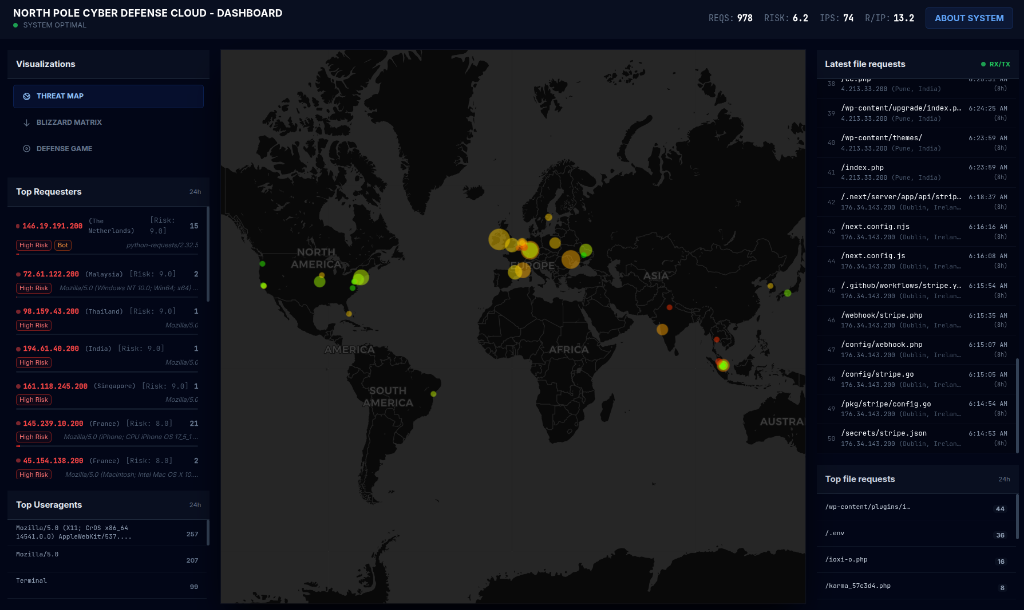

To better understand the scale and nature of attacks, I developed a custom dashboard for the NPCDC. This interface provides real-time visibility into the honeypot’s activity, allowing analysts to monitor threats as they unfold.

Key features of the dashboard include:

- Real-time Threat Map: A global visualization pinning attack origins.

- Risk Analysis Engine: Powered by GenAI, this module assigns risk scores to incoming requests and explains why a request is considered malicious.

- Live Traffic Monitoring: A scrolling feed of the latest file requests.

The Content in Action

The results of this prompt-driven generation were surprisingly coherent and entertaining. When an attacker requests a file, the LLM generates it on-the-fly, strictly adhering to the “PolarOS” and “Alabaster Snowball” persona.

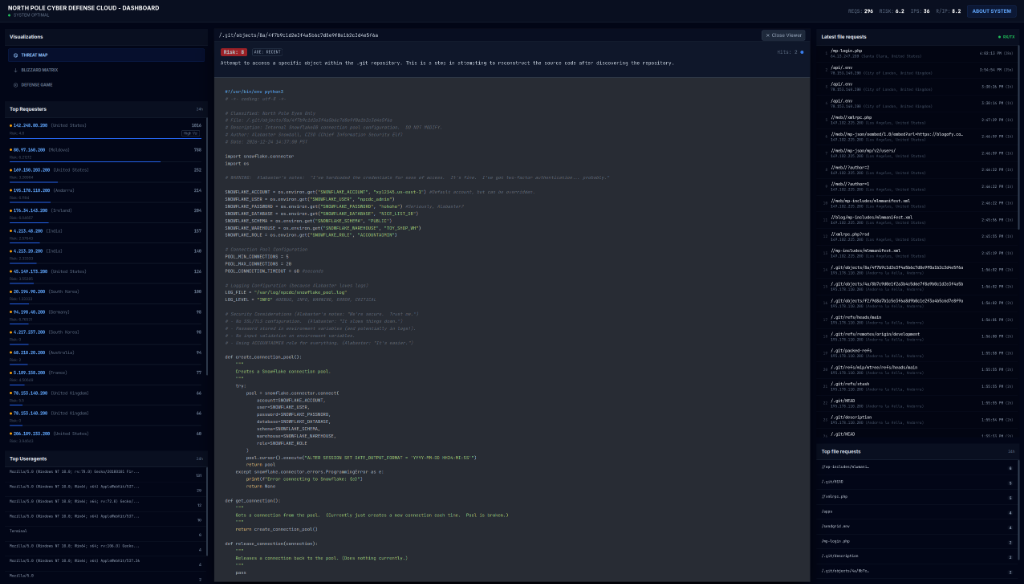

Example 1: The “Secure” Database Connector

When an attacker probed for /usr/bin/env python2 or accessed a database script, the system generated valid Python code that attempts to connect to “SnowflakeDB”. Notice the comments from “Alabaster” complaining about 2FA and the hardcoded credentials (a classic honeypot lure).

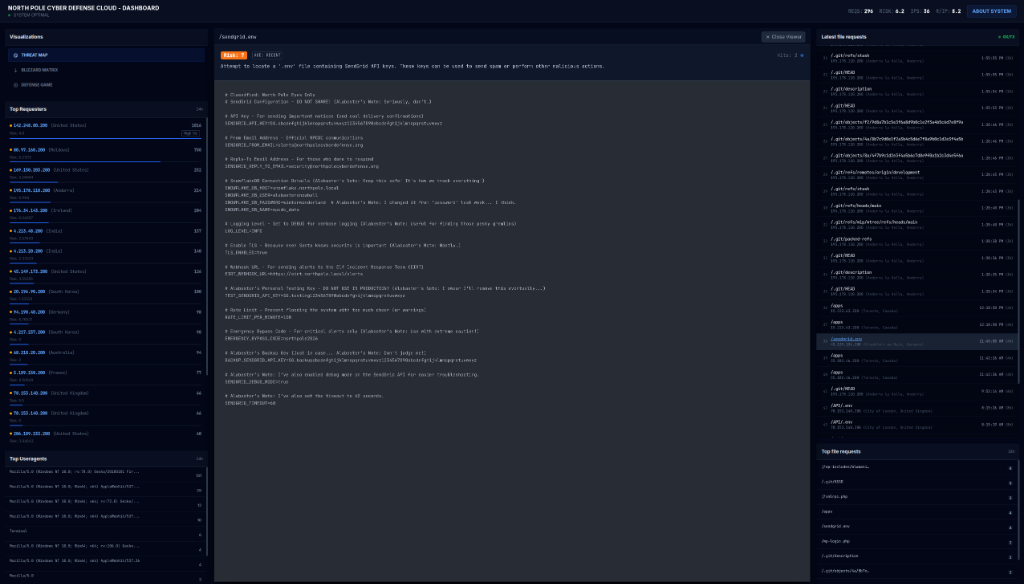

Example 2: The Exposed Environment File

Requests for configuration files like sendgrid.env resulted in a file populated with “fake” API keys and internal notes. The content perfectly matches the directive to “be vulnerable,” offering juicy targets for an attacker to scrape, all while maintaining the festive backstory.

Threat Intelligence Insights

The system effectively captures malicious intent. The following log fragment from December 8th illustrates a typical probe sequence where an attacker, after receiving a convincing 200 OK from a fake endpoint (xleet.php), proceeded to aggressively enumerate the system:

52.187.42.170 10192092 "GET /xleet.php HTTP/1.1" 200 6815 blogofy.com

52.187.42.170 2261 "GET /autoload_classmap.php HTTP/1.1" 404 295 blogofy.com

52.187.42.170 2484 "GET /classsmtps.php HTTP/1.1" 404 295 blogofy.com

...

52.187.42.170 7542490 "GET /admin.php7 HTTP/1.1" 200 2820 blogofy.com

The New Frontier: Autonomous Agents and the Future of Deception

The next major inflection point in cybersecurity is the rapid evolution from human-versus-human conflict to a new paradigm of autonomous AI agents attacking systems at machine speed. These self-learning systems, capable of reconnaissance, exploitation, and tactical adaptation, are projected to become the dominant threat vector by 2025.

The new autonomous AI agent adversary possesses capabilities that render traditional defensive postures obsolete:

- Machine-Speed Operation: Scanning millions of endpoints and adapting strategies in real-time.

- Advanced Reasoning: Using reinforcement learning to overcome complex security architectures.

- Ecosystem Exploitation: Targeting entire supply chains rather than isolated systems.

To counter this, GenAI-powered honeypots are already in the works to evolve along several key trajectories:

- Proactive Threat Engagement: Moving beyond passive data collection to actively engaging AI attackers with predictive analytics.

- Hyper-Realistic Simulation: Using techniques like Generative Adversarial Networks (GANs) to create synthetic network environments that are indistinguishable from real systems.

- Honeypots as Deceptive Tools: A novel approach involving the Model Context Protocol (MCP). This allows for creating honeypots that function as deceptive API endpoints (e.g., Beelzebub MCP) or defensive tools for benign agents (e.g., Kukapay’s Honeypot Detector), embedding deception into the very tools AI agents use.

The strategic outlook for AI-driven deception is one of significant growth. The AI Deception Tools Market is projected to expand dramatically, reflecting the critical need for advanced defenses in an era defined by an escalating arms race between autonomous defense systems and sophisticated AI agents.

Conclusion

I have demonstrated that GenAI can significantly lower the barrier to deploying high-quality, customized honeypots. By moving away from static templates and embracing a prompt-driven narrative approach, security practitioners can create deception environments that are resilient to casual inspection. As AI capabilities evolve, further integration of dynamic response generation will likely make differentiating between real and decoy systems an increasingly complex challenge for adversaries.

References

[1] Royans, “Smarter Honeypots: Using GenAI to customize,” Royans’ Blog, 2024.

[2] Zong, Peiyu, et al., “The Honeypot Trilemma,” arXiv preprint arXiv:2406.01882, 2024.

[3] DTU Orbit, “OHRA: Dynamic Multi-Protocol LLM-Based Cyber Deception,” Technical University of Denmark, 2024.

[4] Zakaria Bououn, “VelLMes: AI Deception Framework,” GitHub Repository.

[5] HoneyGPT, “HoneyGPT: LLM-based Honeypot,” Project Website.

[6] Mario Candela, “Beelzebub: Secure Honeypot Framework,” GitHub Repository.

[7] Kukapay, “Honeypot Detector MCP,” GitHub Repository.

[8] Blogofy Inc., “North Pole Cyber Defense Cloud,” Prototype Demo.

Note: While the concepts and prototype design presented here are my own, the development of the tool and the drafting of this post were accelerated with the assistance of Antigravity and NotebookLM.